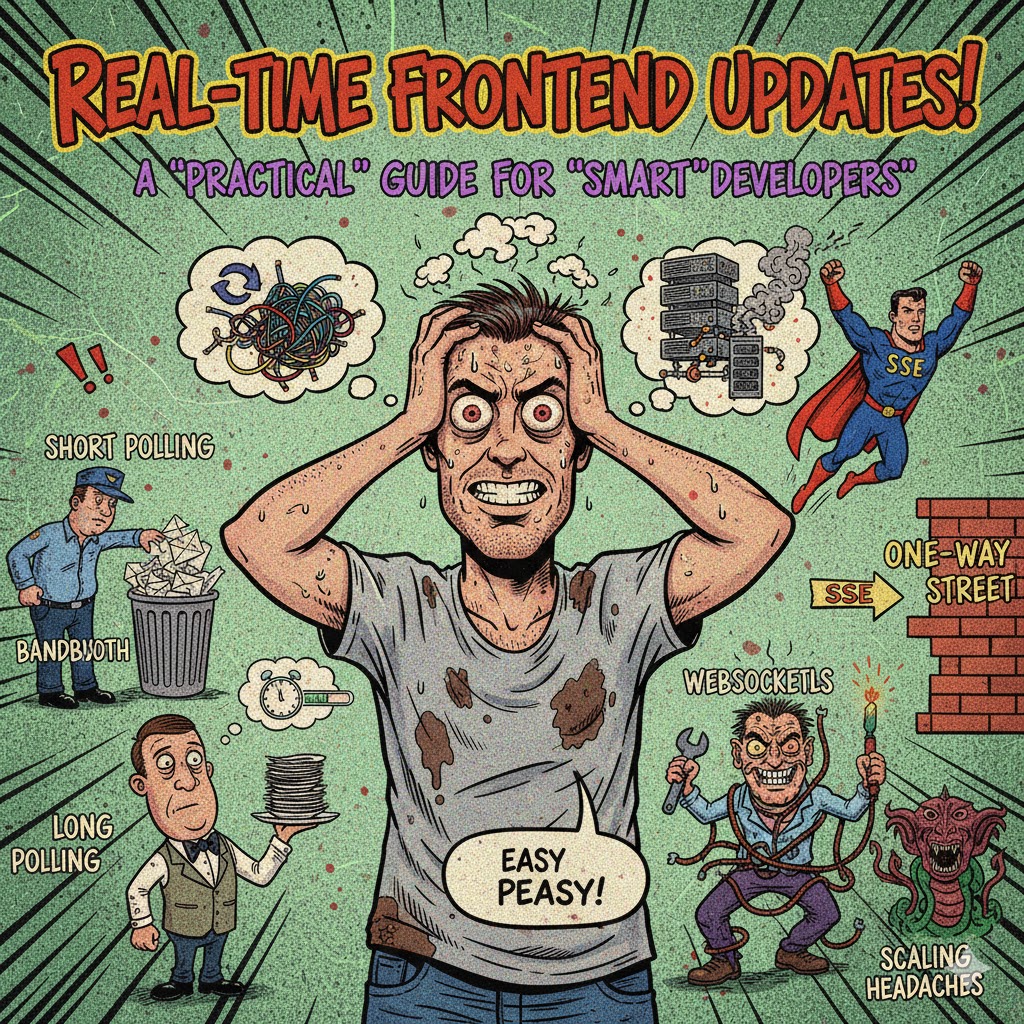

Real-Time Frontend Updates: A Practical Guide for Developers

Real-time updates are the magic that makes modern apps feel alive. Whether it's a chat bubble popping up instantly, a stock price ticking upward, or collaborators editing a document together, the UI needs to reflect new data without forcing users to hit refresh. As frontend developers, our job is to make this happen smoothly—balancing speed, reliability, and resource use. Think of the strategies we'll explore as a toolkit: pick the right one based on your app's needs, not just what's "fastest."

We'll break down the core approaches, pull-based (where the client asks) and push-based (where the server sends), starting with the basics and then digging into trade-offs. Real-world examples like LinkedIn using Server-Sent Events (SSE) for messaging or Stack Overflow relying on WebSockets for feed updates show that there's no one-size-fits-all answer. Interviewers love it when you explain why you chose something and why you skipped the alternatives.

Pull vs. Push: The Two Main Camps

Pull: The client checks in with the server. Simple, but can waste bandwidth.

Push: The server nudges the client when there's news. More efficient for frequent updates, but requires more setup.

An umbrella term here is Comet, which covers techniques for keeping an HTTP connection open so the server can push data (like long polling or SSE).

Efficiency isn't just about speed—consider battery life, browser support, scaling, and infrastructure headaches. WebSockets might seem like the hero, but they're overkill if you don't need two-way chat.

Short Polling: The Straightforward Starter

Imagine your app pinging the server every few seconds: "Any new data?" That's short polling in a nutshell.

How It Works

The client sets up a timer (say, every 5 seconds) to fetch updates via a standard HTTP request. The server responds right away, even if it's just "nothing new."
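
Here's a minimal sketch of that loop in TypeScript. It assumes the caller supplies a `fetchLatest` function (a hypothetical wrapper around whatever endpoint you poll). Instead of a fixed `setInterval`, it chains `setTimeout` calls so the next poll is scheduled only after the previous one settles, which keeps slow responses from piling up.

```typescript
// Short-polling sketch. `fetchLatest` is a hypothetical caller-supplied function.
interface Poller {
  stop(): void;
}

function startPolling<T>(
  fetchLatest: () => Promise<T>,
  onData: (data: T) => void,
  intervalMs: number,
  onError: (err: unknown) => void = () => {},
): Poller {
  let stopped = false;
  let timer: ReturnType<typeof setTimeout> | undefined;

  const tick = async (): Promise<void> => {
    if (stopped) return;
    try {
      const data = await fetchLatest();
      if (!stopped) onData(data);
    } catch (err) {
      onError(err);
    } finally {
      // Schedule the next poll only once this one has settled.
      if (!stopped) timer = setTimeout(tick, intervalMs);
    }
  };

  void tick(); // first poll runs immediately
  return {
    stop() {
      stopped = true;
      if (timer !== undefined) clearTimeout(timer);
    },
  };
}
```

In a real app, `fetchLatest` might be something like `() => fetch("/api/updates").then((r) => r.json())`, where the path is made up for illustration. Remember to call `stop()` when the view unmounts.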

Pros

Dead simple to implement—no special server code needed.

Works over plain HTTP, no persistent connections.

Scales easily since requests are stateless (data lives in a shared database, not server memory).

Cons

Wastes network traffic with empty responses.

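Updates that land between polls sit unseen until the next poll, and intermediate values can be skipped entirely if the endpoint only returns the latest state.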

Not truly real-time; feels periodic.

When to Use It

Perfect for fixed-interval checks, like analytics dashboards refreshing every minute or stock tickers that don't need sub-second precision. It's also a quick fix for legacy backends you can't touch, or as a temporary solution while building something fancier.

When to Skip

Avoid for random or rare updates (most requests are useless) or when true real-time is a must.

Scaling It

Load balancers can bounce requests to any server—the shared DB ensures consistency. No "sticky sessions" required.

Long Polling: Near-Real-Time with a Twist

Long polling upgrades short polling: the client asks, but the server holds the connection open until data arrives (or a timeout hits). Then it responds, and the client immediately reconnects.

How It Works

It's a clever HTTP hack—not a dedicated tech. The connection hangs until there's something to say.
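
The client side of that hang-and-reconnect cycle can be sketched roughly like this. The `request` function and the `{ events, cursor }` response shape are assumptions for illustration, not a standard: the idea is that the client echoes back a cursor so the server knows where it left off, and a server-side timeout comes back as an empty `events` array.

```typescript
// Long-polling sketch. The response shape and `request` function are hypothetical.
interface PollResult<T> {
  events: T[];
  cursor: string;
}

type LongPollRequest<T> = (
  cursor: string | null,
  signal: AbortSignal,
) => Promise<PollResult<T>>;

async function longPoll<T>(
  request: LongPollRequest<T>,
  onEvent: (event: T) => void,
  signal: AbortSignal,
  retryDelayMs = 1000,
): Promise<void> {
  let cursor: string | null = null;
  while (!signal.aborted) {
    try {
      // The server holds this request open until it has data or times out.
      const result = await request(cursor, signal);
      cursor = result.cursor;
      for (const event of result.events) onEvent(event);
      // Fall through to the top of the loop: reconnect immediately.
    } catch {
      if (signal.aborted) break;
      // Pause briefly after a failure instead of hammering the server.
      await new Promise<void>((resolve) => setTimeout(resolve, retryDelayMs));
    }
  }
}
```

Passing an `AbortSignal` gives the caller a way to stop the loop, for example when the user navigates away.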

Pros

Cuts useless requests compared to short polling.

Delivers a near-real-time feel.

Works in almost every browser via basic XMLHttpRequest.

Often a fallback for WebSockets/SSE in old browsers.

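It's plain HTTP, so it benefits from HTTP/2 multiplexing: held requests can share one TCP connection instead of each occupying their own.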

Cons

Ties up server resources with open connections.

Proxies might cut idle connections.

Slight delay if an update lands during reconnection.

Needs a server-side queue or cursor so messages that arrive while the client is reconnecting aren't lost.

More backend effort than short polling.

When to Use It

Great for near-real-time across all browsers, especially with infrequent messages. Think social feeds, basic analytics, or stock apps where a second's delay is fine.

Scaling Challenges

If servers are stateful (holding data in memory), load balancers might send the next request to a different server that "forgets" the context. Solutions:

Sticky sessions: Route the same client to one server. Easy but risky—uneven load, data loss on crashes.

Shared storage (e.g., Redis or DB): Make servers stateless. Safer for critical data, but harder to set up.

Stateful vs. Stateless: Quick Refresher

Stateful: Server remembers stuff between requests (e.g., in-memory sessions). Dynamic but tricky to scale.

Stateless: Each request stands alone; data comes from elsewhere (e.g., DB or tokens). Simpler, more scalable.

In backend terms: Stateful = traditional sessions; Stateless = REST APIs with JWTs. In frontend (React/etc.): Stateful components manage their own data; stateless ones just render props.
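
To make the difference concrete, here's a toy sketch with hypothetical names throughout. `StatefulCounter` keeps its data inside one server instance, while `statelessHit` reads and writes through a `SharedStore` interface standing in for Redis or a database.

```typescript
// Stand-in for shared storage such as Redis or a DB (hypothetical interface).
interface SharedStore {
  get(key: string): number | undefined;
  set(key: string, value: number): void;
}

// Stateful: the count lives in this server instance's memory.
class StatefulCounter {
  private counts = new Map<string, number>();

  hit(clientId: string): number {
    const next = (this.counts.get(clientId) ?? 0) + 1;
    this.counts.set(clientId, next);
    return next;
  }
}

// Stateless: the handler remembers nothing; all state comes from the store.
function statelessHit(store: SharedStore, clientId: string): number {
  const next = (store.get(clientId) ?? 0) + 1;
  store.set(clientId, next);
  return next;
}
```

If a load balancer alternates one client between two `StatefulCounter` instances, each instance reports a count of 1. Two servers calling `statelessHit` against the same store report 1 and then 2. A real shared store would also need an atomic increment rather than this read-then-write pair.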

Server-Sent Events (SSE): Push Over HTTP

SSE is a proper push tech: the client opens a one-way HTTP channel, and the server streams updates as they happen. It's Comet in action.

How It Works

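The client opens a persistent HTTP connection and the server writes text events to it. Each event can carry an ID, which the browser sends back in a Last-Event-ID header when it reconnects, so the server can resume from where the stream broke off.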
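
On the wire, each event is just a few `field: value` lines followed by a blank line, sent in a response with `Content-Type: text/event-stream`. The helper below is a small sketch of how a server might serialize one event; the `SseEvent` type and function name are made up for illustration.

```typescript
// Serializes one event in the text/event-stream format (helper name is hypothetical).
interface SseEvent {
  data: string;
  id?: string; // echoed back by the browser as Last-Event-ID on reconnect
  event?: string; // custom event name; defaults to "message" on the client
  retryMs?: number; // reconnection delay hint for the browser
}

function formatSseEvent({ data, id, event, retryMs }: SseEvent): string {
  const lines: string[] = [];
  if (id !== undefined) lines.push(`id: ${id}`);
  if (event !== undefined) lines.push(`event: ${event}`);
  if (retryMs !== undefined) lines.push(`retry: ${retryMs}`);
  // A multi-line payload needs one `data:` field per line.
  for (const line of data.split("\n")) lines.push(`data: ${line}`);
  // A blank line marks the end of the event.
  return lines.join("\n") + "\n\n";
}
```

On the browser side, `new EventSource(url)` handles parsing and reconnection. Named events are received with `source.addEventListener("price", handler)`, and the handler can read `event.data` and `event.lastEventId`.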

Pros

True real-time with minimal delay.

HTTP-based, so proxy-friendly and supports HTTP/2 multiplexing (multiple streams on one TCP connection).

Auto-reconnects if dropped.

Low overhead (~5 bytes per message).

Battery-efficient on mobiles (device can sleep between pushes).

Easy to polyfill.

Cons

One-way only (server to client).

More overhead than WebSockets.

Limited to about 6 connections per domain per browser over HTTP/1.1, and that budget is shared across all open tabs.

Needs CORS for cross-domain.

When to Use It

Ideal for server-driven updates at medium frequency: notifications, live sports scores, crypto prices, progress bars, or server monitoring. LinkedIn uses it for IM—proving you don't always need bidirectional.

When to Skip

If you need client-to-server pushes (go WebSockets) or ultra-frequent messages where every byte counts.

WebSockets: Full-Duplex Powerhouse

WebSockets ditch HTTP for a separate protocol (ws/wss), creating a two-way pipe for instant chatter.

How It Works

Starts with an HTTP handshake, then upgrades to a persistent, low-overhead channel. Supports text or binary data.

Pros

Native bidirectional support.

Super low latency and overhead.

Great browser support.

Handles binary efficiently (e.g., images in games).

No CORS setup for cross-domain connections, though that also means the server has to check the Origin header itself.

Cons

Complex setup and manual reconnection logic.

Non-HTTP protocol—firewalls/proxies might block.

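No HTTP/2 multiplexing in typical deployments; each connection needs its own TCP connection. (RFC 8441 defines WebSockets over HTTP/2, but don't count on it being available end to end.)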

Scaling headaches (more on this below).
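
The "manual reconnection logic" mentioned above usually boils down to reconnecting with exponential backoff. Here's one way to sketch it. To keep the example runnable outside a browser, the socket is created through a `SocketFactory` callback, which is an assumption of this sketch rather than part of the WebSocket API; the trailing comment shows how a browser adapter might look.

```typescript
// Reconnect-with-backoff sketch. `SocketFactory` is a hypothetical adapter type.
interface SocketHandlers {
  onOpen(): void;
  onMessage(data: unknown): void;
  onClose(): void;
}

type SocketFactory = (handlers: SocketHandlers) => { close(): void };

// Doubles the wait after each failed attempt, up to a cap.
function backoffDelay(attempt: number, baseMs = 500, maxMs = 30000): number {
  return Math.min(maxMs, baseMs * 2 ** attempt);
}

function connectWithRetry(
  createSocket: SocketFactory,
  onMessage: (data: unknown) => void,
  schedule: (fn: () => void, ms: number) => void = (fn, ms) => {
    setTimeout(fn, ms);
  },
): { close(): void } {
  let attempt = 0;
  let closedByUser = false;
  let socket: { close(): void } | undefined;

  const open = (): void => {
    socket = createSocket({
      onOpen: () => {
        attempt = 0; // a successful connection resets the backoff
      },
      onMessage,
      onClose: () => {
        if (closedByUser) return;
        schedule(open, backoffDelay(attempt));
        attempt += 1;
      },
    });
  };

  open();
  return {
    close() {
      closedByUser = true;
      socket?.close();
    },
  };
}

// A browser adapter might look like this (URL is made up):
// const browserFactory: SocketFactory = (h) => {
//   const ws = new WebSocket("wss://example.com/live");
//   ws.onopen = () => h.onOpen();
//   ws.onmessage = (e) => h.onMessage(e.data);
//   ws.onclose = () => h.onClose();
//   return ws;
// };
```

In production you would likely add random jitter to the delay so that thousands of clients don't all reconnect at the same instant after an outage.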

When to Use It

When you truly need two-way, high-speed comms: chats, multiplayer games, collaborative editors like Figma or Google Docs. Stack Overflow uses it for feeds despite one-way needs—sometimes efficiency wins.

When to Skip

Overkill for one-way pushes; risky if your infra isn't WebSocket-ready.

WebSockets Scaling and Gotchas

Multiplexing Woes

One TCP per connection means multiple tabs or chat rooms explode resource use. Fixes:

Fall back to SSE/long polling (HTTP/2 multiplexes).

Shared Workers (spotty support).

Broadcast Channel API (one tab handles the socket, shares via messaging).

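localStorage plus the storage event, which fires in the other tabs when one tab writes (sessionStorage is scoped to a single tab, so it won't work across tabs).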

Accept independent tabs.
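
As a rough illustration of the Broadcast Channel option: one "leader" tab owns the socket and republishes every update, and the other tabs just listen. The `TabChannel` interface below is a hypothetical thin wrapper so the sketch stays runnable anywhere, and how the leader tab gets elected is left out (the Web Locks API is one possibility).

```typescript
// Cross-tab sharing sketch. `TabChannel` is a hypothetical wrapper interface.
interface TabChannel {
  publish(message: unknown): void;
  subscribe(listener: (message: unknown) => void): void;
}

function shareUpdates(
  channel: TabChannel,
  render: (update: unknown) => void,
): { onSocketMessage(update: unknown): void } {
  // Every tab renders whatever other tabs publish.
  channel.subscribe(render);
  return {
    // Only the leader tab calls this, from its socket's message handler.
    onSocketMessage(update: unknown): void {
      // A BroadcastChannel does not deliver a message back to its sender,
      // so the leader renders its own copy directly.
      render(update);
      channel.publish(update);
    },
  };
}

// A browser adapter might look like this (channel name is made up):
// const bc = new BroadcastChannel("live-updates");
// const channel: TabChannel = {
//   publish: (m) => bc.postMessage(m),
//   subscribe: (fn) => bc.addEventListener("message", (e) => fn(e.data)),
// };
```

The payoff is one socket per browser instead of one per tab. The cost is extra logic for handing leadership over when the leader tab closes.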

Infrastructure Hurdles

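Some proxies and firewalls don't understand the Upgrade handshake and drop unencrypted ws connections. Using wss helps, because intermediaries can't inspect or rewrite traffic inside the TLS tunnel.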

Authentication Tricks

The browser WebSocket API doesn't let you set custom headers such as Authorization on the handshake. Options:

Query params in URL (encrypted with wss, but logs might leak).

Short-lived tokens from a separate service.

Send the token in the first message (DoS risk: the server has to accept unauthenticated connections first).

Cookies (same-domain only).

Pass the token through the Sec-WebSocket-Protocol (subprotocol) header, which the browser API does let you set.
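
Two of these options are easy to sketch. The helpers below are illustrative only: the `ticket` parameter name and the `{ type: "auth", token }` message shape are assumptions, not a standard.

```typescript
// Auth helper sketches. Parameter names and message shape are hypothetical.
interface AuthMessage {
  type: "auth";
  token: string;
}

// Option: a short-lived ticket in the query string.
function buildTicketUrl(baseUrl: string, ticket: string): string {
  const url = new URL(baseUrl);
  url.searchParams.set("ticket", ticket);
  return url.toString();
}

// Option: the token travels in the first message after the socket opens.
function authMessage(token: string): string {
  const message: AuthMessage = { type: "auth", token };
  return JSON.stringify(message);
}

// Server side: accept only a well-formed auth message as the first frame.
function parseAuthMessage(raw: string): AuthMessage | null {
  try {
    const value: unknown = JSON.parse(raw);
    if (typeof value === "object" && value !== null) {
      const candidate = value as { type?: unknown; token?: unknown };
      if (candidate.type === "auth" && typeof candidate.token === "string") {
        return { type: "auth", token: candidate.token };
      }
    }
  } catch {
    // Not JSON: treat as unauthenticated.
  }
  return null;
}
```

With the first-message approach, a common mitigation for the DoS risk is to close any connection that hasn't authenticated within a short deadline.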

Wrapping Up: Choose Wisely

Libraries like Socket.IO handle fallbacks for you (WebSockets, with HTTP long polling as the backup transport). Always encrypt with TLS. Reserve WebSockets for genuine full-duplex needs; don't reach for them by reflex.

| Strategy | Best For | Wins | Pain Points |
|---|---|---|---|
| Short Polling | Fixed intervals; non-real-time | Simple, scalable | Wasteful traffic; no real-time |
| Long Polling | Near-real-time; broad support | HTTP-friendly; fallback king | Connection delays; overhead |
| SSE | One-way real-time; medium freq | Auto-reconnect; battery-savvy | One-way only; some overhead |
| WebSockets | Bidirectional; ultra-fast | Efficient; low latency | Infra issues; no multiplexing |

Pick based on your app's reality—users will thank you for the smooth experience.